Prevent sensitive data exposure attacks

This section covers sensitive data exposure risks, how attackers use random fuzzing tools (fuzzers) to find and exploit them, and the best practices and tools that mitigate them in modern application delivery.

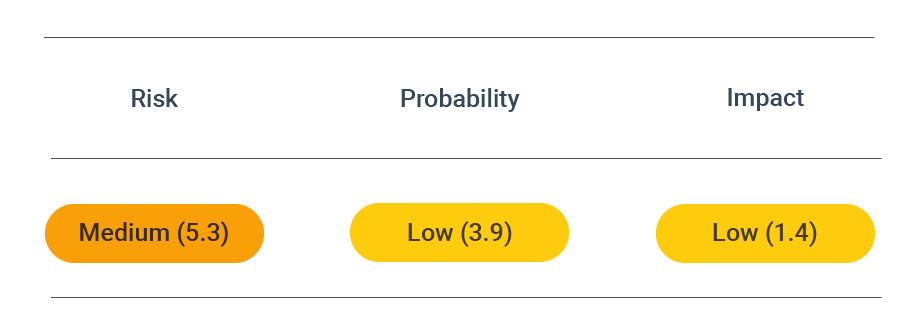

Security assessment

CVSS vector: AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:N/A:N
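The base score behind this vector can be reproduced with the CVSS v3.1 base-score equations. The sketch below assumes the vector uses CVSS v3.1 metric values, because its `CVSS:3.x` version prefix is not shown above. The metric weights and the `Roundup` rule follow the v3.1 specification, and the function names are illustrative. For this vector, confidentiality is the only non-zero impact metric, and the result is 5.3, a Medium rating.

```python
import math

# CVSS v3.1 metric weights (from the v3.1 specification).
WEIGHTS = {
    "AV": {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2},
    "AC": {"L": 0.77, "H": 0.44},
    "UI": {"N": 0.85, "R": 0.62},
    "C": {"H": 0.56, "L": 0.22, "N": 0.0},
    "I": {"H": 0.56, "L": 0.22, "N": 0.0},
    "A": {"H": 0.56, "L": 0.22, "N": 0.0},
}
# Privileges Required is weighted differently when Scope is Changed.
PR_WEIGHTS = {
    "U": {"N": 0.85, "L": 0.62, "H": 0.27},
    "C": {"N": 0.85, "L": 0.68, "H": 0.5},
}


def roundup(value: float) -> float:
    # v3.1 Roundup: smallest one-decimal number >= value, robust to float error.
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def base_score(vector: str) -> float:
    # Accepts "AV:N/AC:L/..." with or without a leading "CVSS:3.1/" prefix.
    m = dict(part.split(":") for part in vector.split("/"))
    scope = m["S"]
    # Impact Sub-Score (ISS) combines the three impact metrics.
    iss = 1 - (1 - WEIGHTS["C"][m["C"]]) * (1 - WEIGHTS["I"][m["I"]]) * (1 - WEIGHTS["A"][m["A"]])
    if scope == "U":
        impact = 6.42 * iss
    else:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    exploitability = (
        8.22 * WEIGHTS["AV"][m["AV"]] * WEIGHTS["AC"][m["AC"]]
        * PR_WEIGHTS[scope][m["PR"]] * WEIGHTS["UI"][m["UI"]]
    )
    if impact <= 0:
        return 0.0
    total = impact + exploitability if scope == "U" else 1.08 * (impact + exploitability)
    return roundup(min(total, 10))


def severity(score: float) -> str:
    # Qualitative severity rating scale from the CVSS v3.1 specification.
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"
```

Because `roundup` works on scaled integers, it avoids floating-point artifacts such as 5.299999 being rounded up to 5.4.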

Vulnerability information

Sensitive data exposure occurs when an application or its supporting systems fail to apply adequate security controls to sensitive information. Missing or weak encryption is one of the most common vulnerabilities that lead to the exposure of sensitive data. Cybercriminals typically exploit sensitive data exposure to obtain passwords, cryptographic keys, tokens, and other information they can use to compromise a system. Commonly known flaws that lead to sensitive data exposure include:

Lack of SSL/HTTPS security on websites

As web applications become central to how modern enterprises operate, protecting users and visitors becomes essential. SSL/TLS encrypts data exchanged between a user's browser or client application and the web server, and the server's certificate lets the client verify that it is talking to the genuine site. When an organization serves its site over plain HTTP or misconfigures HTTPS, it puts users' privacy and data integrity at risk, because traffic can easily be intercepted or altered in transit.
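Python's standard `ssl` module can enforce both encryption and server authentication on a TLS connection. The following is a minimal sketch, assuming TLS 1.2 is the oldest protocol version you are willing to accept. `make_server_context` and its `certfile`/`keyfile` arguments are hypothetical placeholders for your own deployment.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    # create_default_context() turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context()
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 (assumed policy: TLS 1.2 or newer only).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def make_server_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    # Purpose.CLIENT_AUTH creates a server-side context.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Hypothetical paths to the server's certificate chain and private key.
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx
```

A client context like this can be passed to `urllib.request.urlopen(url, context=ctx)` or used with `ctx.wrap_socket(sock, server_hostname=...)`. Starting from `create_default_context()` rather than a bare `SSLContext` keeps certificate and hostname verification on by default.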

SQL injection vulnerabilities in databases

Without proper security controls, such as input validation and parameterized queries, attackers can inject malicious SQL statements through application inputs to retrieve the contents of a database. Depending on the privileges of the database account, injected statements can also perform administrative actions on the database. Attackers can retrieve sensitive information, such as user credentials or application configuration details, and use it to move further into the system and compromise it.
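Python's built-in `sqlite3` module can show the difference between an injectable query and a safe one. This sketch assumes a hypothetical `users` table. The `find_user_unsafe` function is deliberately vulnerable and exists only for contrast.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    # Hypothetical schema, in memory, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password_hash TEXT)")
    conn.executemany(
        "INSERT INTO users (username, password_hash) VALUES (?, ?)",
        [("alice", "hash-a"), ("bob", "hash-b")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is pasted into the SQL text, so it can change the query.
    return conn.execute(
        f"SELECT id, username FROM users WHERE username = '{username}'"
    ).fetchall()


def find_user(conn: sqlite3.Connection, username: str) -> list:
    # Safe: the "?" placeholder makes the driver bind username as a value, never as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()
```

With the payload `' OR '1'='1`, the unsafe version returns every row. The parameterized version treats the payload as a literal username and returns nothing. Other database drivers use different placeholder styles (for example `%s`), but the principle is the same.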

Prevent attacks

Sensitive data exposure can result in massive remediation costs and lasting damage to the affected organization's reputation. It is, therefore, important to build a strong, organization-wide culture of preventing sensitive data exposure.

The following subsections outline best practices and tools that help prevent sensitive data exposure.

Identify and classify sensitive data

Identify which of the data the application processes, stores, or transmits is sensitive, and classify it by sensitivity level. Secure each class with controls appropriate to its level, so that the most sensitive data receives the strictest controls.
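A classification scheme can be as simple as a mapping from fields to sensitivity levels, plus a rule for which controls each level requires. The levels, field names, and control labels in this sketch are hypothetical examples, not a standard. The key design choice is that unclassified fields fail closed to the most restrictive level.

```python
from __future__ import annotations

from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical data inventory: which level each field belongs to.
FIELD_CLASSIFICATION: dict[str, Sensitivity] = {
    "display_name": Sensitivity.PUBLIC,
    "department": Sensitivity.INTERNAL,
    "email": Sensitivity.CONFIDENTIAL,
    "password_hash": Sensitivity.RESTRICTED,
    "card_number": Sensitivity.RESTRICTED,
}

# Hypothetical control labels per level; stricter levels add controls.
REQUIRED_CONTROLS: dict[Sensitivity, frozenset[str]] = {
    Sensitivity.PUBLIC: frozenset(),
    Sensitivity.INTERNAL: frozenset({"authentication"}),
    Sensitivity.CONFIDENTIAL: frozenset({"authentication", "tls", "encryption_at_rest"}),
    Sensitivity.RESTRICTED: frozenset(
        {"authentication", "tls", "encryption_at_rest", "mfa", "audit_log"}
    ),
}


def classify(field: str) -> Sensitivity:
    # Fail closed: anything not yet classified is treated as RESTRICTED.
    return FIELD_CLASSIFICATION.get(field, Sensitivity.RESTRICTED)


def controls_for(field: str) -> frozenset[str]:
    return REQUIRED_CONTROLS[classify(field)]


def filter_by_clearance(record: dict, clearance: Sensitivity) -> dict:
    # Keep only fields whose sensitivity does not exceed the caller's clearance.
    return {k: v for k, v in record.items() if classify(k) <= clearance}
```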

Apply access controls

Security teams should concentrate on authentication, authorization, and session management by provisioning a robust Identity and Access Management (IAM) mechanism. With the right access controls in place, organizations can ensure that only authorized individuals can view and modify sensitive data.
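One common way to implement the authorization part is role-based access control (RBAC) with deny-by-default checks. The roles and permission strings below are hypothetical. A real IAM system would load them from a central policy store rather than a hard-coded dictionary.

```python
from __future__ import annotations

from typing import Iterable

# Hypothetical role-to-permission policy; unknown roles map to no permissions.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "support_agent": frozenset({"customer:read"}),
    "billing_admin": frozenset({"customer:read", "payment:read", "payment:update"}),
}


def is_authorized(roles: Iterable[str], permission: str) -> bool:
    # Deny by default: access is granted only if some role explicitly has the permission.
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


def require_permission(roles: Iterable[str], permission: str) -> None:
    # Raise instead of returning False so callers cannot silently ignore a denial.
    if not is_authorized(roles, permission):
        raise PermissionError(f"missing permission: {permission}")
```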

Perform proper data encryption with strong, updated protocols

Sensitive data should never be stored in plain text. Ensure that user credentials and other personal information are protected with current, well-vetted cryptographic algorithms and protocols, and replace any algorithms or protocol versions that are known to be weak or deprecated.

Store passwords using strong, adaptive, and salted-hashing functions

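As security controls have advanced, attackers have also devised clever ways to recover passwords. For instance, an attacker who obtains a password file that stores unsalted hashes can look each hash up in a rainbow table of precomputed hashes to recover the original passwords. A salt is a random value, unique to each password, that is combined with the password before hashing. Identical passwords then produce different hashes, which makes precomputed tables useless. Adaptive functions such as Argon2, scrypt, bcrypt, or PBKDF2 also let you raise their work factor over time to slow down brute-force attacks, which is why salted, adaptive hashes are recommended over unsalted ones.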
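Python's standard library provides PBKDF2 through `hashlib.pbkdf2_hmac`, which is enough to sketch salted, adaptive password storage. The iteration count below is an assumed work factor that you should tune for your hardware and current guidance. Where available, a dedicated Argon2 or bcrypt library is generally preferable.

```python
from __future__ import annotations

import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; raise it as hardware gets faster
SALT_BYTES = 16


def hash_password(password: str) -> tuple[bytes, bytes]:
    # A fresh random salt per password means identical passwords hash differently.
    salt = os.urandom(SALT_BYTES)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    # Recompute with the stored salt and compare in constant time.
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

Storing the iteration count with each hash (not shown here) lets you raise the work factor later and rehash passwords as users log in. `hmac.compare_digest` is used so that verification time does not depend on how many leading bytes match.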

Disable caching and autocomplete on data collection forms

While caching and autocomplete improve the user experience, they also introduce security risks that attackers can exploit. An attacker with access to a user's browser, for example on a shared or unattended device, may be able to log in to an account simply because autocomplete fills in the stored credentials.

Caching stores copies of web pages so that they load faster on subsequent visits, but an attacker who gains access to that cache can use it to map out a user's activity. Attackers can also use cached data to tailor malware. As a best practice, disable caching and autocomplete by default on forms that collect sensitive data, and enable them only where needed.
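On the server side, caching of sensitive pages is usually controlled with HTTP response headers, and form autocomplete with the HTML `autocomplete` attribute. This is a minimal sketch of WSGI middleware, assuming a WSGI-based Python web application. `no_cache_middleware` and `LOGIN_FORM` are hypothetical names for illustration.

```python
from __future__ import annotations

from typing import Callable, Iterable

# Headers that tell browsers and shared caches not to store responses.
NO_CACHE_HEADERS = [
    ("Cache-Control", "no-store"),
    ("Pragma", "no-cache"),  # for legacy HTTP/1.0 caches
]

# Hypothetical login form with autocomplete disabled.
LOGIN_FORM = """<form method="post" action="/login" autocomplete="off">
  <input name="username" autocomplete="off">
  <input name="password" type="password" autocomplete="off">
</form>"""


def no_cache_middleware(app: Callable) -> Callable:
    """Wrap a WSGI app so every response carries no-store caching headers."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        def start_response_no_cache(status, headers, exc_info=None):
            # Drop any caching headers set by the app, then append the strict ones.
            kept = [(name, value) for name, value in headers
                    if name.lower() not in ("cache-control", "pragma")]
            return start_response(status, kept + NO_CACHE_HEADERS, exc_info)

        return app(environ, start_response_no_cache)

    return wrapped
```

`Cache-Control: no-store` tells browsers and intermediate caches not to store the response at all. Many browsers ignore `autocomplete="off"` on login fields so that password managers keep working, so the attribute reduces, rather than eliminates, stored-credential risk.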

Minimize data surface area

Security teams should reduce the data attack surface of a system through careful API design, ensuring that server responses include only the bare minimum of data the client needs. Server responses must also not expose information about the system's configuration, such as software versions or stack traces. Filter data on the server side rather than leaving the client to discard it, and use random testing (for example, fuzzing) to confirm that responses do not leak sensitive data that attackers could intercept in transit.
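Response minimization is easiest to enforce with an explicit allowlist of fields per endpoint. Random testing can then check that the allowlist holds even as stored records gain new internal fields. In this sketch, `PUBLIC_USER_FIELDS`, `to_public_user`, and the record shape are hypothetical. The randomized check is a simple property-style test, not a full HTTP fuzzer.

```python
from __future__ import annotations

import random
import string

# Hypothetical allowlist: the only fields a public profile response may contain.
PUBLIC_USER_FIELDS = ("id", "display_name")


def to_public_user(user: dict) -> dict:
    # Build the response from the allowlist, so fields added to the record later
    # (password hashes, emails, internal flags) are excluded by default.
    return {field: user[field] for field in PUBLIC_USER_FIELDS if field in user}


def random_user_record(rng: random.Random) -> dict:
    # Simulate a stored record with sensitive fields plus random extra keys.
    record = {
        "id": rng.randint(1, 10_000),
        "display_name": "user",
        "email": "user@example.com",
        "password_hash": "not-a-real-hash",
    }
    for _ in range(rng.randint(0, 5)):
        key = "".join(rng.choices(string.ascii_lowercase, k=8))
        record[key] = rng.random()
    return record


def check_response_shape(iterations: int = 1_000, seed: int = 0) -> None:
    # Randomized check: no generated record may leak a non-allowlisted field.
    rng = random.Random(seed)
    allowed = set(PUBLIC_USER_FIELDS)
    for _ in range(iterations):
        response = to_public_user(random_user_record(rng))
        if not set(response) <= allowed:
            raise AssertionError(f"leaked fields: {set(response) - allowed}")
```

Building responses from an allowlist rather than deleting known-sensitive fields from a denylist means a newly added column stays private until someone deliberately exposes it.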